- Software

- Features

CASE MANAGEMENT

- Work Management

- Work Collaboration

- Email Management

DOCUMENTS

- Document Management

- Document Production

FINANCE

- Financial Management

EXTENSIBILITY

- Application Integration

- Features

- Why us

WHY CHOOSE SHAREDO?

- Case Studies

- Solution Accelerators

- Security

- Pricing

- Resources
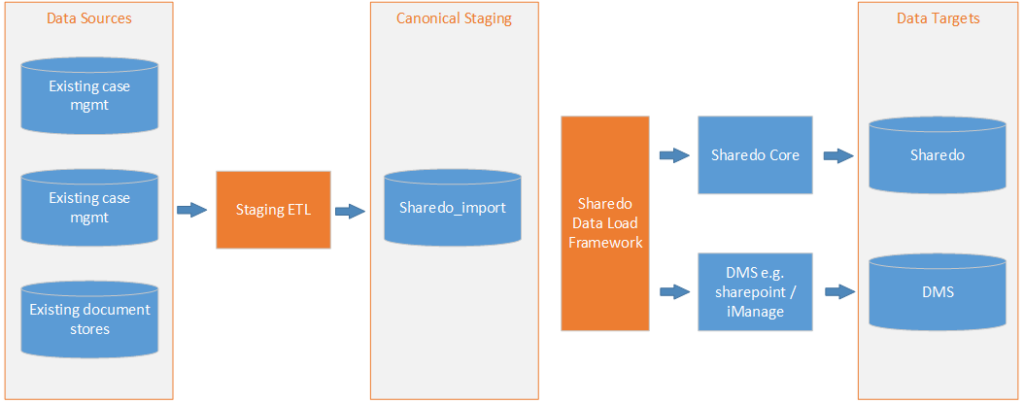

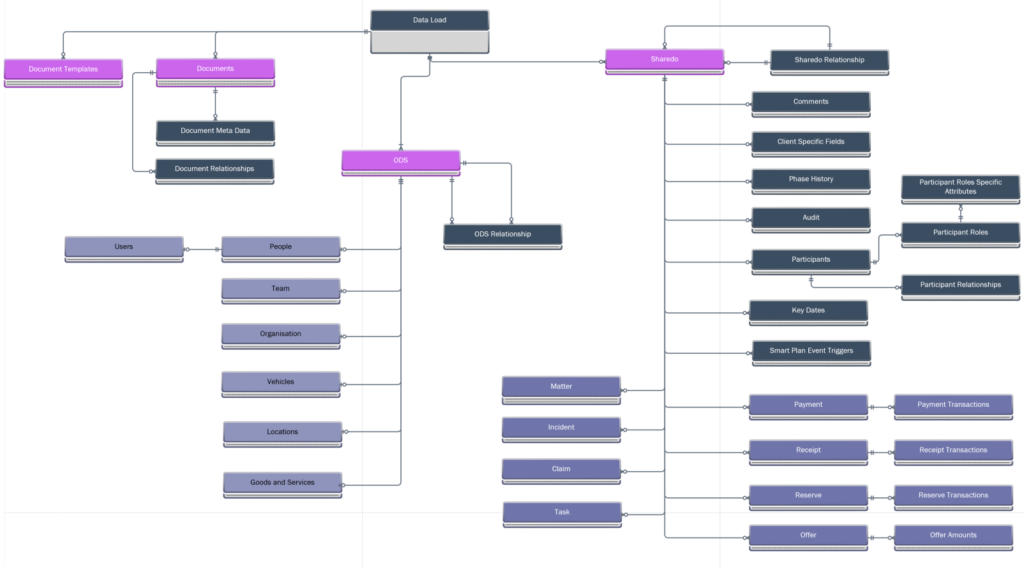
